I am a Staff Research Scientist at DeepMind on the Agency team and an Honorary Fellow at the University of Edinburgh.

I am fortunate to work with wonderful students at the University of Edinburgh, where I help support the MARBLE group.

If you are interested in working together at Google DeepMind, see open roles here. Unfortunately, I do not have any current openings for direct reports or interns.

My research focuses on understanding the foundations of agency, learning, and computation.

I tend to get excited by fundamental questions, philosophical depth, and clarity. I typically work with the reinforcement learning problem, drawing on tools and perspectives from across philosophy, math, and computer science.

I am currently interested in developing the scientific bedrock of agency. Previously, I studied the limits of reward as a mechanism for capturing goals (2021, 2022, 2023). Before that, my dissertation studied how agents model the worlds they inhabit, focusing on the representational practices that underlie effective learning and planning.

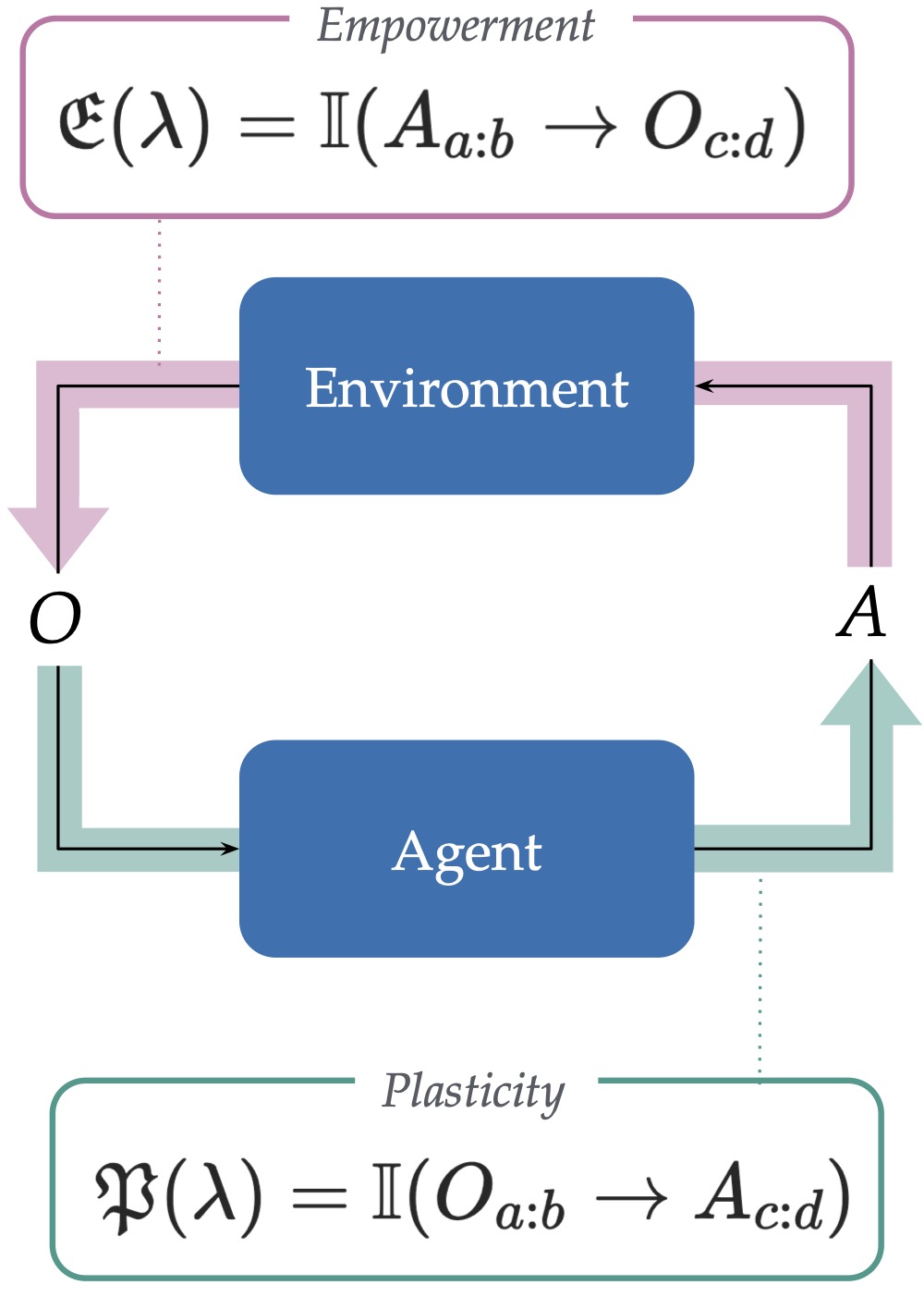

Plasticity as the Mirror of Empowerment

NeurIPS 2025

We propose an agent-centric measure for plasticity, and highlight a new connection to empowerment.

Joint work with Michael Bowling, André Barreto, Will Dabney, Shi Dong, Steven Hansen, Anna Harutyunyan, Khimya Khetarpal, Clare Lyle, Razvan Pascanu, Georgios Piliouras, Doina Precup, Jonathan Richens, Mark Rowland, Tom Schaul, Satinder Singh.

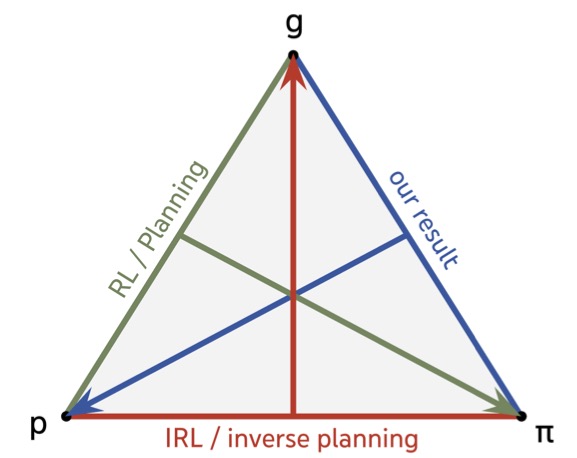

General Agents Contain World Models

ICML 2025

We prove that any agent that can solve a sufficiently rich set of goal-directed tasks must contain a predictive model of the environment.

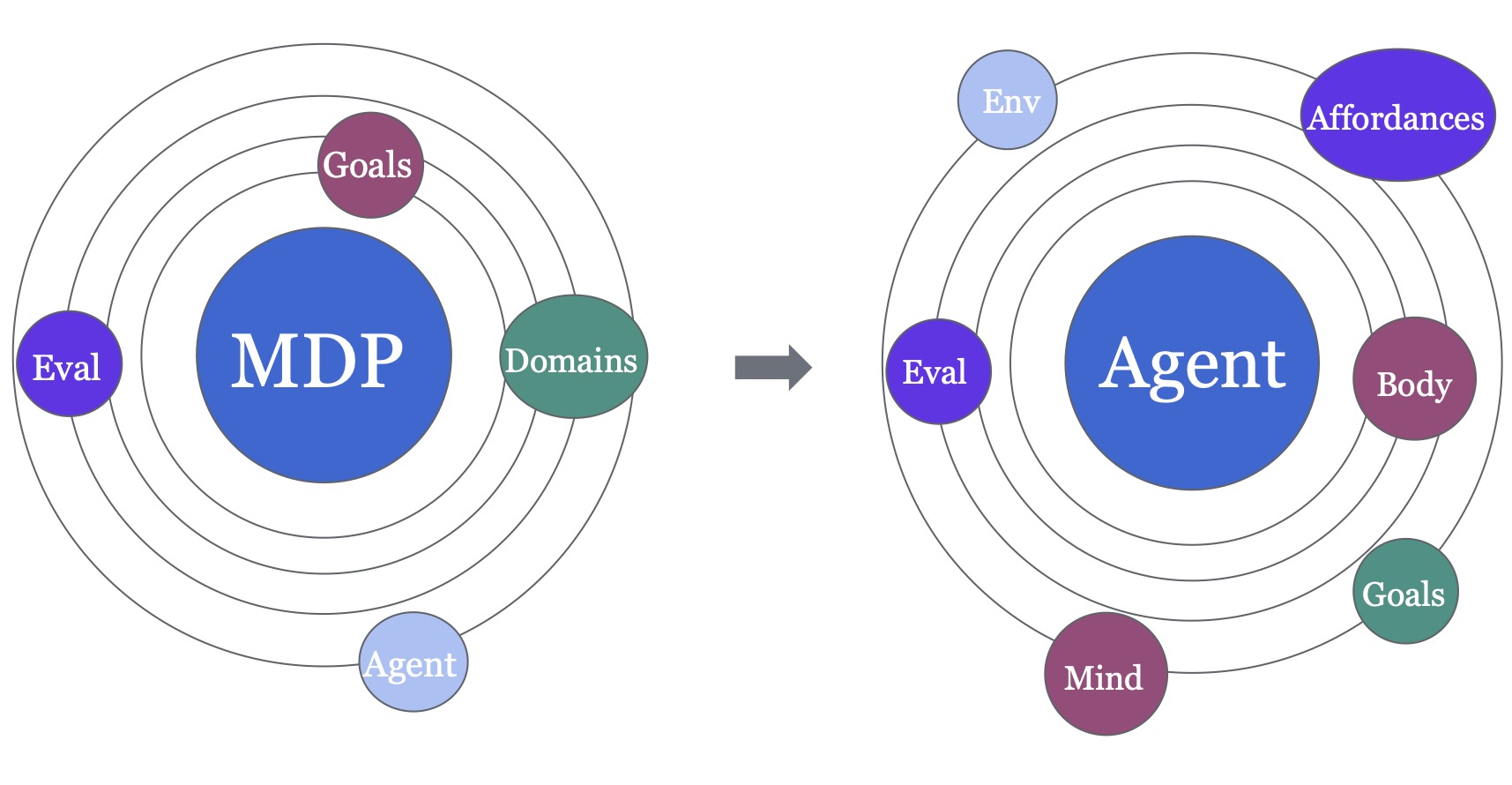

We reflect on the paradigm of RL and suggest three departures from our current thinking.

Joint work with Mark Ho and Anna Harutyunyan.

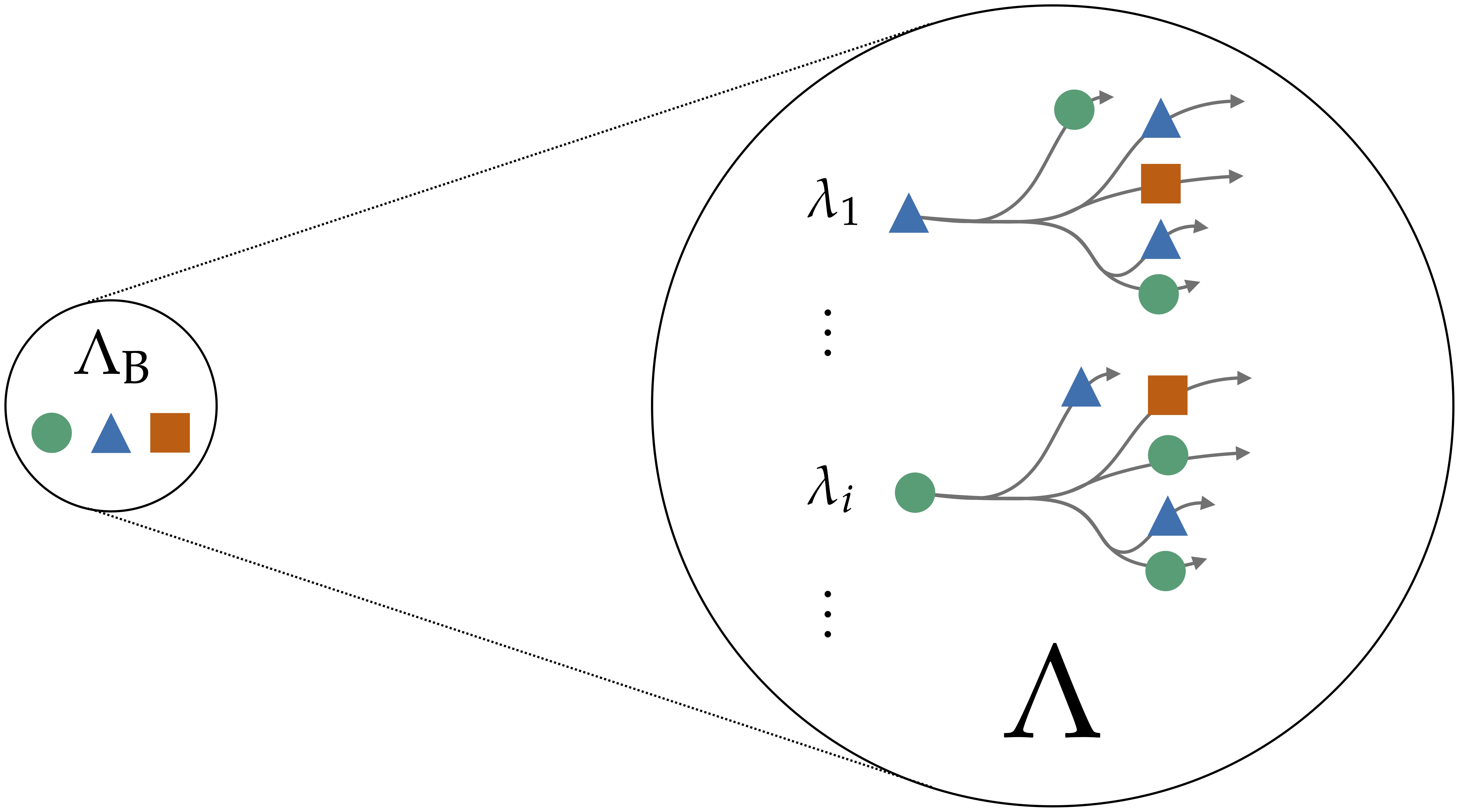

A Definition of Continual Reinforcement Learning

NeurIPS 2023

We present a precise definition of the continual reinforcement learning problem.

Settling the Reward Hypothesis

ICML 2023

We illustrate the implicit requirements on goals and purposes under which the reward hypothesis holds.

We develop a new theory describing how people simplify and represent problems when planning.

Led by Mark K. Ho, joint with Carlos G. Correa, Jonathan D. Cohen, Michael L. Littman, Thomas L. Griffiths.

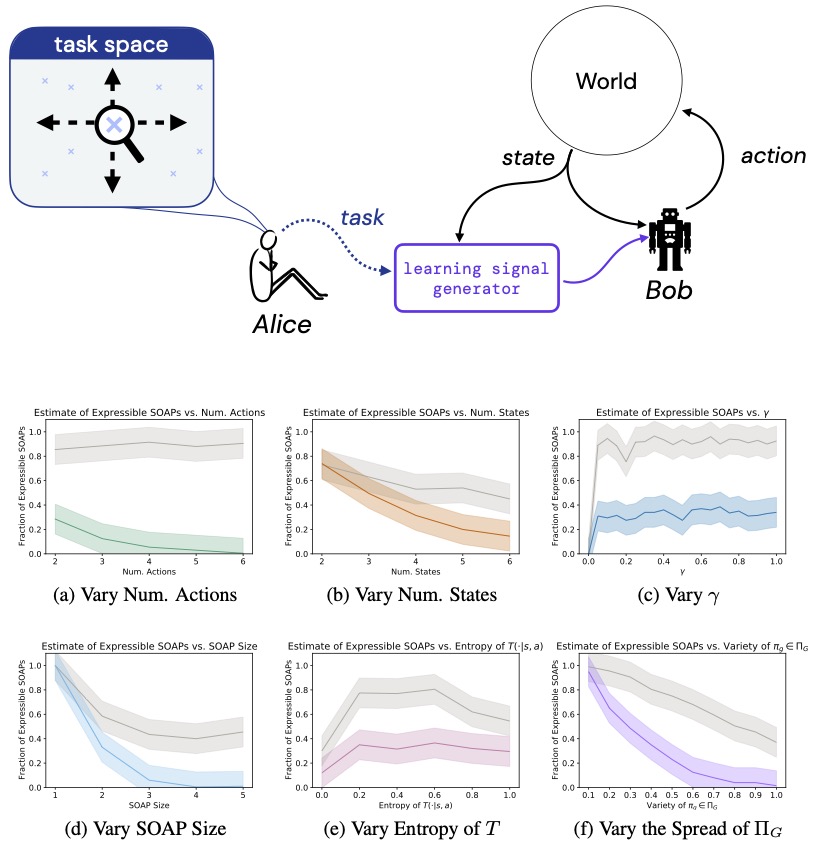

On the Expressivity of Markov Reward

NeurIPS 2021 (Outstanding Paper Award)

We study the expressivity of Markov reward functions in finite environments by analysing what kinds of tasks such functions can express.

Joint work with Will Dabney, Anna Harutyunyan, Mark K. Ho, Michael L. Littman, Doina Precup, Satinder Singh.

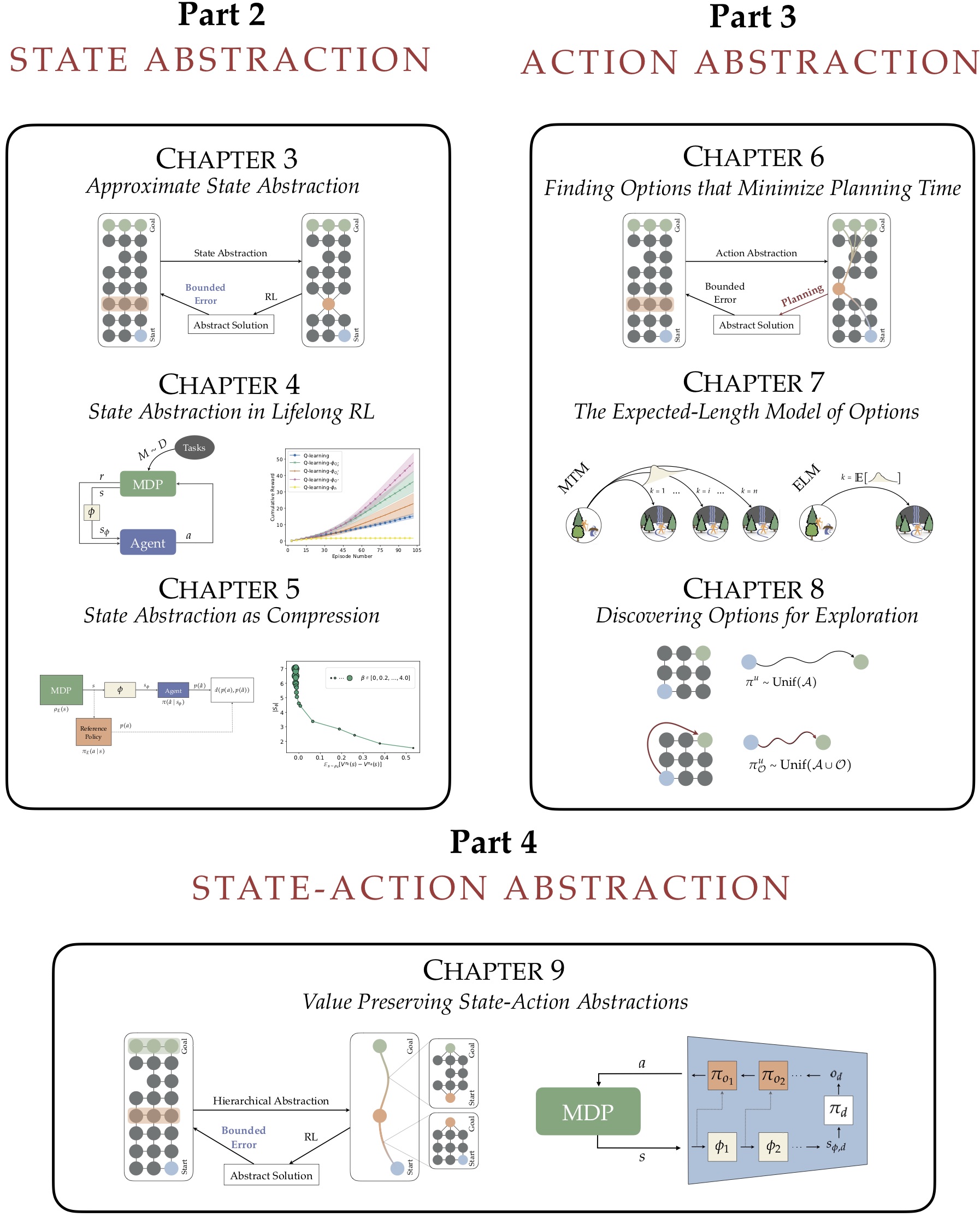

A Theory of Abstraction in Reinforcement Learning

Ph.D. Thesis, 2020

My dissertation, which aims to understand abstraction and its role in effective reinforcement learning.

Advised by Michael L. Littman.

The Value of Abstraction

Current Opinion in Behavioral Sciences, 2019

We discuss the vital role that abstraction plays in efficient decision making.

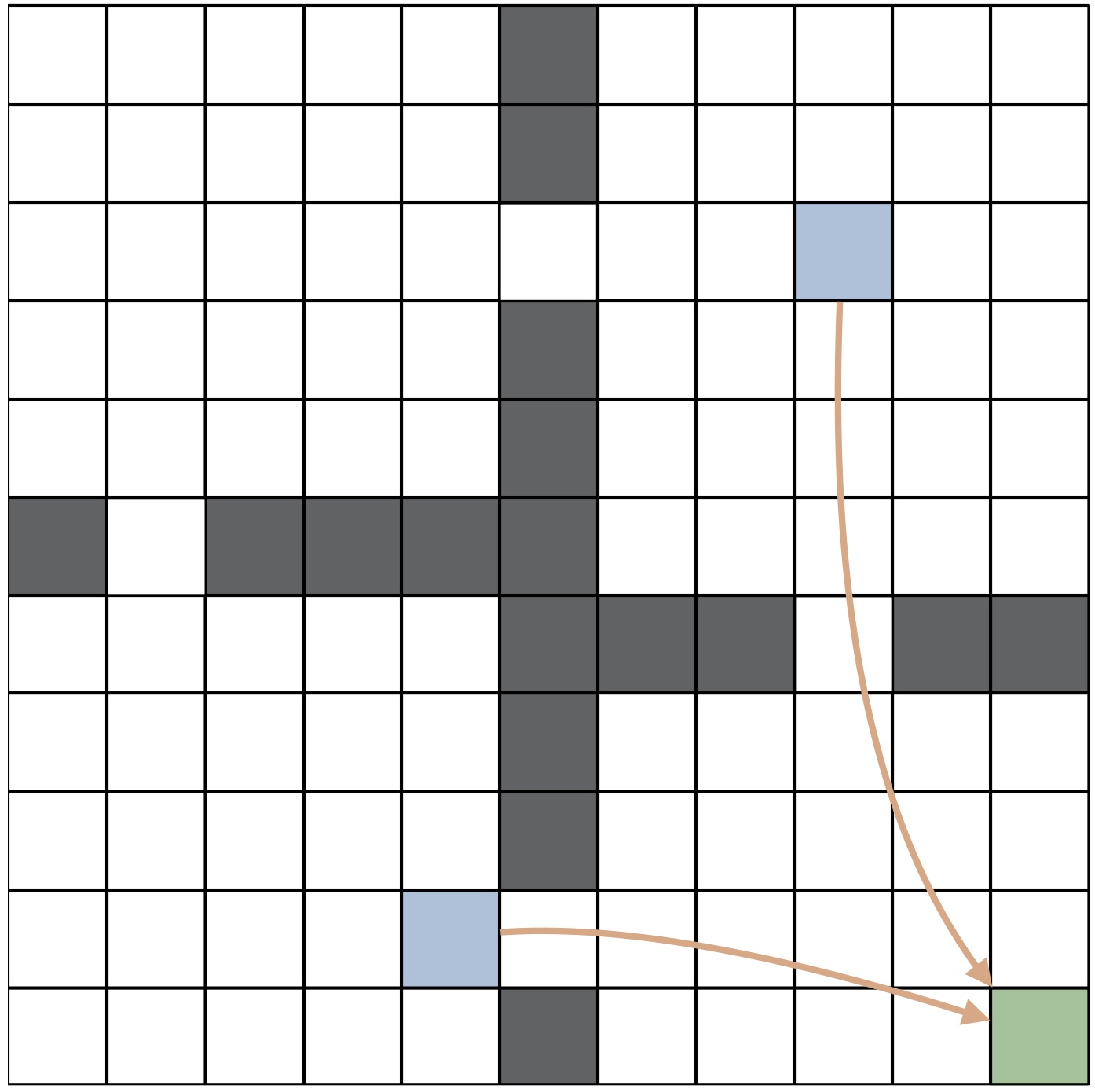

We prove that the problem of finding options that minimize planning time is NP-Hard.

Before joining DeepMind, I completed my Ph.D. in Computer Science at Brown University, where I was fortunate to be advised by Prof. Michael Littman. I got my start in research working with Prof. Stefanie Tellex at Brown, and before that I studied Philosophy and Computer Science at Carleton College.

I'm a big fan of basketball, baking, reading, lifting, games, philosophy, and music: I play violin and guitar, and love listening to just about everything. I live in Edinburgh, Scotland, with my wife Elizabeth and our dog Barley.

Q: What should I call you? A: I usually go by "Dave", but I take no offense to "David". If I'm teaching your class, "Dave" / "Professor Dave" / "Professor Abel" are all okay.

If you want to arrange a call with me for any reason, I have a recurring open slot in my calendar here.

I welcome feedback of all kinds: please feel free to fill out this anonymous feedback form.